A Benchmark for Inpainting of Clothing Images with Irregular Holes

Paper and Code

Jul 09, 2020

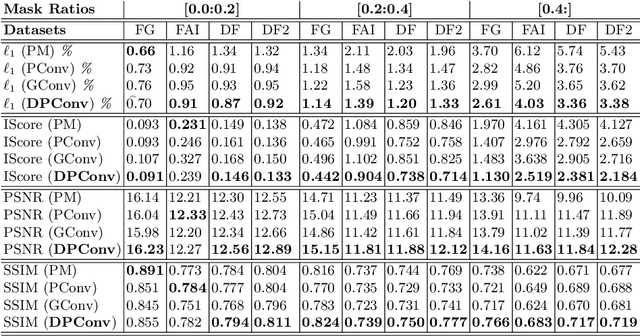

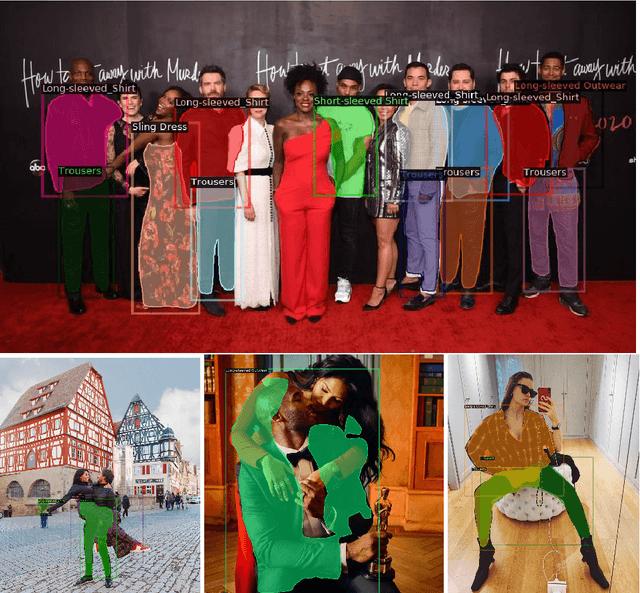

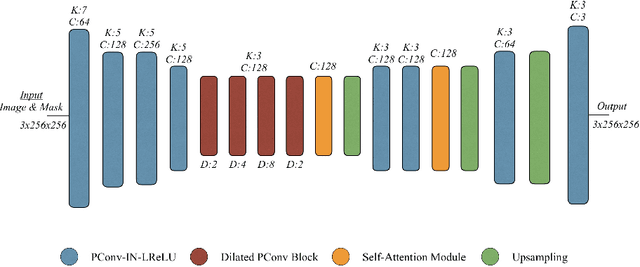

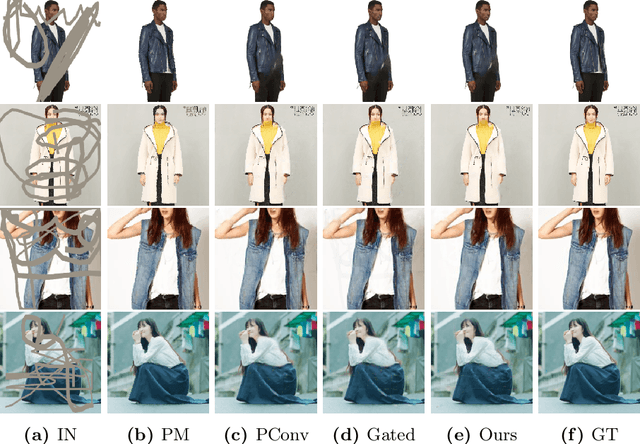

Fashion image understanding is an active research field with a large number of practical applications for the industry. Despite its practical impacts on intelligent fashion analysis systems, clothing image inpainting has not been extensively examined yet. For that matter, we present an extensive benchmark of clothing image inpainting on well-known fashion datasets. Furthermore, we introduce the use of a dilated version of partial convolutions, which efficiently derive the mask update step, and empirically show that the proposed method reduces the required number of layers to form fully-transparent masks. Experiments show that dilated partial convolutions (DPConv) improve the quantitative inpainting performance when compared to the other inpainting strategies, especially it performs better when the mask size is 20% or more of the image. \keywords{image inpainting, fashion image understanding, dilated convolutions, partial convolutions

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge