Mark d'Inverno

Modelling Human Values for AI Reasoning

Feb 09, 2024

Abstract: One of today's most significant societal challenges is building AI systems whose behaviour, or the behaviour it enables within communities of interacting agents (human and artificial), aligns with human values. To address this challenge, we detail a formal model of human values for their explicit computational representation. To our knowledge, this has not been attempted as yet, which is surprising given the growing volume of research integrating values within AI. Taking as our starting point the wealth of research investigating the nature of human values from social psychology over the last few decades, we set out to provide such a formal model. We show how this model can provide the foundational apparatus for AI-based reasoning over values, and demonstrate its applicability in real-world use cases. We illustrate how our model captures the key ideas from social psychology research and propose a roadmap for future integrated, and interdisciplinary, research into human values in AI. The ability to automatically reason over values not only helps address the value alignment problem but also facilitates the design of AI systems that can support individuals and communities in making more informed, value-aligned decisions. More and more, individuals and organisations are motivated to understand their values more explicitly and explore whether their behaviours and attitudes properly reflect them. Our work on modelling human values will enable AI systems to be designed and deployed to meet this growing need.

The pop song generator: designing an online course to teach collaborative, creative AI

Jun 15, 2023

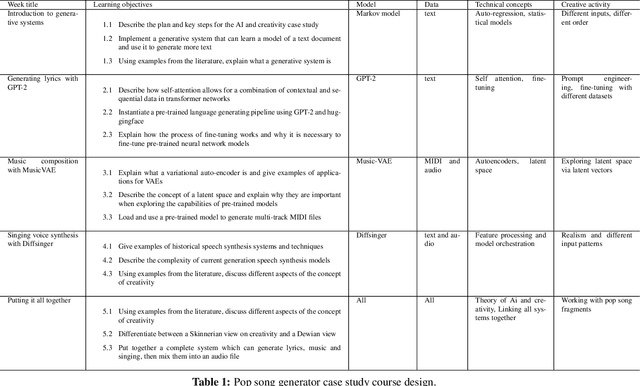

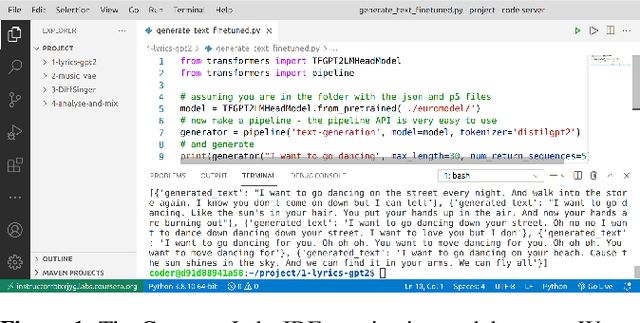

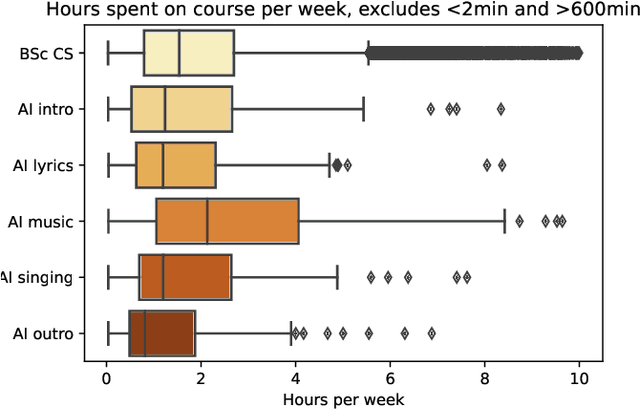

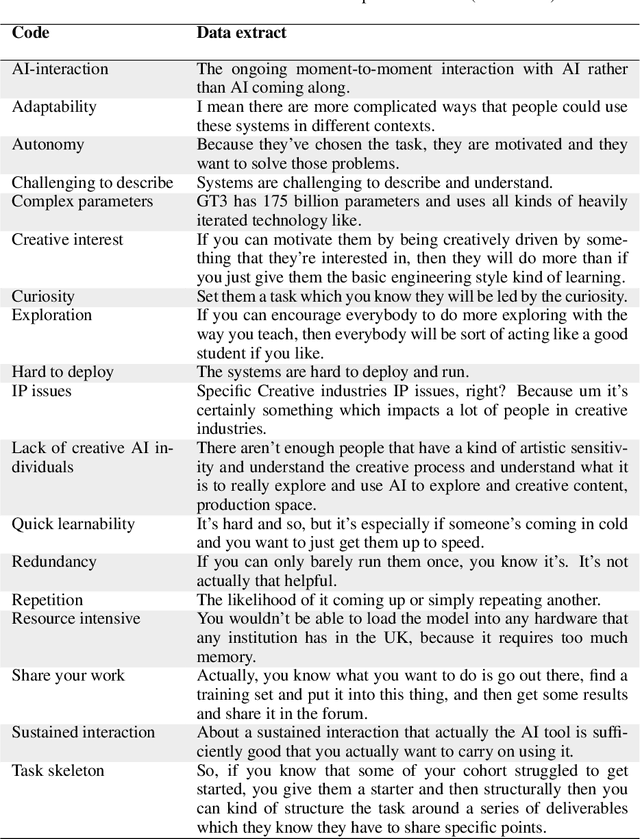

Abstract: This article describes and evaluates a new online AI-creativity course. The course is based around three near-state-of-the-art AI models combined into a pop song generating system. A fine-tuned GPT-2 model writes lyrics, Music-VAE composes musical scores and instrumentation, and Diffsinger synthesises a singing voice. We explain the decisions made in designing the course, which is based on Piagetian, constructivist 'learning-by-doing'. We present details of the five-week course design with learning objectives, technical concepts, and creative and technical activities. We explain how we overcame technical challenges to build a complete pop song generator system, consisting of Python scripts, pre-trained models, and JavaScript code that runs in a dockerised Linux container via a web-based IDE. A quantitative analysis of student activity provides evidence on engagement and a benchmark for future improvements. A qualitative analysis of a workshop with experts validated the overall course design and suggested the need for a stronger creative brief and for ethical and legal content.

Human Values in Multiagent Systems

May 04, 2023

Abstract: One of the major challenges we face with ethical AI today is developing computational systems whose reasoning and behaviour are provably aligned with human values. Human values, however, are notorious for being ambiguous, contradictory and ever-changing. In order to bridge this gap, and get us closer to the situation where we can formally reason about implementing values into AI, this paper presents a formal representation of values, grounded in the social sciences. We use this formal representation to articulate the key challenges for achieving value-aligned behaviour in multiagent systems (MAS) and a research roadmap for addressing them.

A computational framework of human values for ethical AI

May 04, 2023

Abstract: In the diverse array of work investigating the nature of human values from psychology, philosophy and social sciences, there is a clear consensus that values guide behaviour. More recently, a recognition that values provide a means to engineer ethical AI has emerged. Indeed, Stuart Russell proposed shifting AI's focus away from simply "intelligence" towards intelligence "provably aligned with human values". This challenge (the value alignment problem), together with others including an AI's learning of human values, aggregating individual values to groups, and designing computational mechanisms to reason over values, has energised a sustained research effort. Despite this, no formal, computational definition of values has yet been proposed. We address this through a formal conceptual framework rooted in the social sciences that provides a foundation for the systematic, integrated and interdisciplinary investigation into how human values can support designing ethical AI.

Explainable Computational Creativity

May 11, 2022

Abstract: Human collaboration with systems within the Computational Creativity (CC) field is often restricted to shallow interactions, where the creative processes, of systems and humans alike, are carried out in isolation, with little or no intervention from the user, and without any discussion about how the unfolding decisions are taking place. Fruitful co-creation requires a sustained ongoing interaction that can include discussions of ideas, comparisons to previous/other works, incremental improvements and revisions, etc. For these interactions, communication is an intrinsic factor. This means giving a voice to CC systems and enabling two-way communication channels between them and their users so that they can: explain their processes and decisions, support their ideas so that these are given serious consideration by their creative collaborators, and learn from these discussions to further improve their creative processes. For this, we propose a set of design principles for CC systems that aim at supporting greater co-creation and collaboration with their human collaborators.
